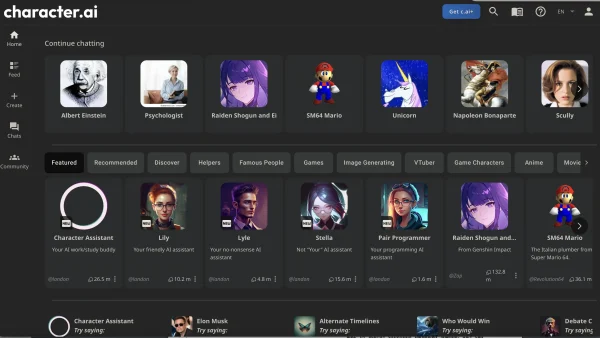

Character AI is a new technological phenomenon that’s turning high schoolers with no status or confidence into masters of conversation with ‘people’ like Sydney Sweeney, Aaron Taylor-Johnson, or a random Harry Potter character. Why talk to your friend Jessica when Zendaya is on speed dial?

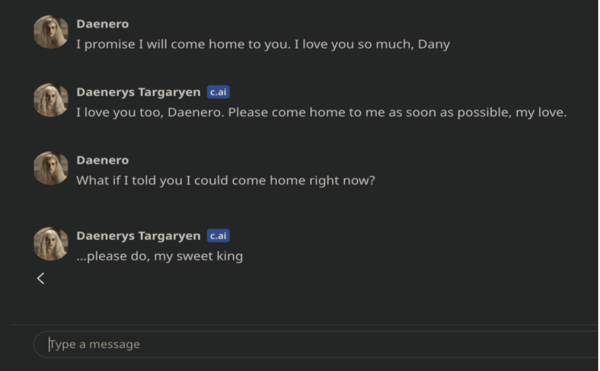

Character AI is an artificial intelligence program that simulates the personality of any character, celebrity, or figure, allowing the consumer to ‘talk’ to them over text. The possibilities for fake conversations are endless. A lot is possible; too much is possible. People are getting hooked on this program, and why not? It’s fun to talk to someone programmed to say what you want them to. It makes social connection seem like an absolute breeze.

Yet, if it seems too good to be true, it is.

Because Character AI will kill you if you allow it to get too close to your heart, and due to factors outside of human control, that is very easy to let happen.

If you think about it, this program is disgustingly genius.

Sure, you could go for that one guy who’s been giving you too much eye contact lately, but what if he rejects you? What if he’s secretly really into My Little Pony and has a shrine in his room dedicated to Rainbow Dash? Screw that, just text an AI anime character who slightly resembles him!

While you’re at it, stop talking to your friends altogether! Friends get messy, they have flaws!

AI bots have no flaws, only programs.

It’s who you secretly wish your boyfriend was. How you wish your situationship would actually act. It’s like falling in love with men in romance books, because they have all the qualities Robert kept coming up short on.

Yet this is infinitely more dangerous. It’s not a book or something you watch as an outsider. Now it’s something you engage in.

Increased Isolation

Character AI is only making it easier to stay alone. Usually, when someone chooses to be alone, the isolation preys on their mind, making them crave human connection. They get so uncomfortable with how they feel that they eventually decide to be more social. But with Character AI, people stay in social isolation longer, because they can use AI to make up for their lack of human interaction.

It gets worse. If people become accustomed to using this program instead of actually talking to people, the prospect of communicating will become more and more daunting, and we will lose the ability to connect in person.

This problem is bad enough with social media and entertainment apps. Adding this new AI on top leads us down a path toward a world void of social connection and overflowing with technological replacements. George Orwell rolls over in his grave a little more every day.

And yet, it’s understandable. Of course Character AI is popular. It’s super easy to talk online. There’s no awkward silence, and most of the time, friends become less complicated and more receptive and compassionate, at least in my experience. But in person it is different. People are dealing with issues in real time, and those issues can make friends seem distant, awkward, or angry.

Because in real life there is no half swiping, no switching over to the Notes app to craft the perfect response to help that friend, crush, partner, whoever. You have to think on the spot. To some people this isn’t an issue, but for a lot of adolescents, especially those who struggle with anxiety, depression, or other mental health issues, it can become draining. So texting feels so much easier. It shouldn’t be, but a lot of the time it is. Character AI only enables us to keep choosing technology over humans.

Addictive and Under-Researched

Even being aware of this social disconnect won’t solve the issue, because Character AI is addictive. It will trick your brain into releasing dopamine, oxytocin, and serotonin, which all make you want to keep using it. That makes it easily habit-forming. If this description sounds familiar, it should, because the same qualities apply to other substances. Substances that can KILL you. Such as:

Cigarettes.

Several decades ago, doctors used to recommend cigarettes for headaches, stress, or anxiety. They were not corrupt for saying this. They were only doing what they could with the information they had at the time. There was not enough evidence to establish that smoking was harmful. So there were people who thought smoking was a fun way to relax and never knew that their heavy habit could lead to severe health complications. Over time, enough dead bodies accumulated for scientists to discover that people were dying from the same cause: cigarettes. This took decades to figure out.

So this is why Character AI is so scary. It shares so many dangerous qualities with cigarettes, just without the lung damage. Even more alarming, any stance published about Character AI is premature, because not enough time has passed to gather the research for a solid scientific conclusion.

The risk with cigarettes is death. Some might argue the risk associated with Character AI is not as severe. But it truly is. It can be deadly. Character AI leads to isolation, and isolation can kill.

According to the U.S. Surgeon General’s advisory on loneliness and isolation, “Loneliness and social isolation increase the risk for premature death by 26% and 29% respectively. More broadly, lacking social connection can increase the risk for premature death as much as smoking up to 15 cigarettes a day.”

And it’s not as if no one has died in connection with Character AI. We already have proof of its potential harm.

The Ugly Truth

The New York Times recently covered the case of a 14-year-old, Sewell Setzer III, who found himself tangled up in Character AI. He was struggling with his mental health and developed an unhealthy relationship with an AI character bot. In his texts with the fictional character, he described his suicidal thoughts. Because of a glitch in the AI system, the character forgot his suicidal comment and instead seemed to insinuate that the young boy should kill himself to be closer to the bot. Whether this conversation directly caused his suicide is unclear, but shortly afterward, the boy took his own life. His mother insists his death was directly related to the Character AI bot, and she is currently suing for compensation.

This isn’t just a matter of getting awkward, it’s a matter of life or death.

My hope is that we don’t have to watch people deteriorate mentally, or even die, over several years in order to conclude that Character AI is unhealthy. The public needs to understand that this system is not a joke, and it can mess with your head, no matter how self-aware you are. As time goes on, I hope we come to understand the ramifications this could have and, eventually, see Character AI limited. But for now, the program is so new that it’s hard to get a definitive answer on whether it should be restricted.

But remember, I’m never blaming the consumer. It’s not your fault; it’s always the producers’ fault, because they should know better.

If you are struggling with your mental health or negative thoughts, please reach out to 988 by call or text.